
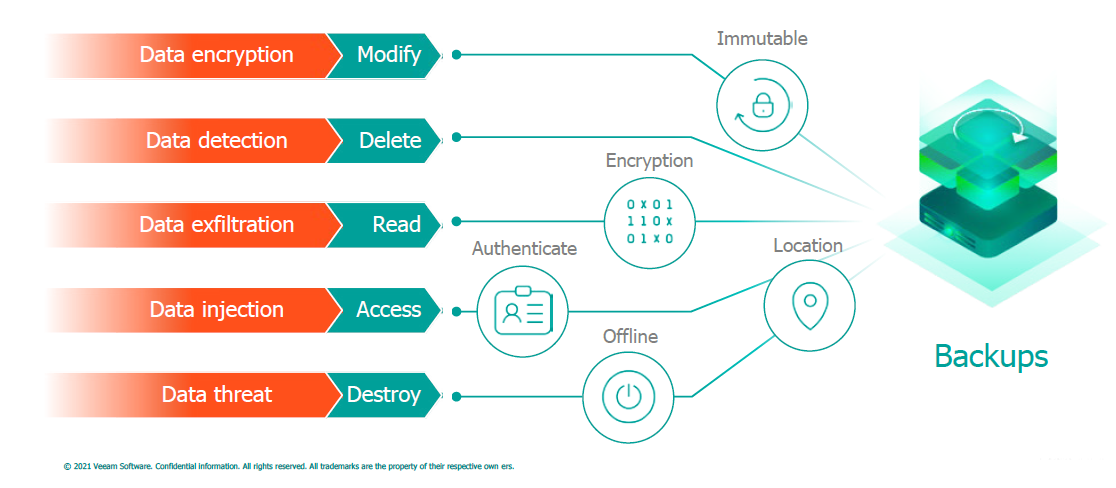

When an attack on infrastructure aims to damage data, backups are the fastest and most reliable way to recover to a point in time before the infection occurred. Note that having to resort to backups usually means other defenses have already failed: email filtering (email being the most common attack vector), antivirus programs, authentication systems, access control, or network segmentation.

Restoring from backups defeats the purpose of ransomware, which is to extort a ransom from the victim. Attackers therefore increasingly target the backup system itself and try to damage backup copies in the early stages of an attack. For this reason, infrastructure should be designed using best practices in both architecture and feature usage to minimize the risk of losing backups.

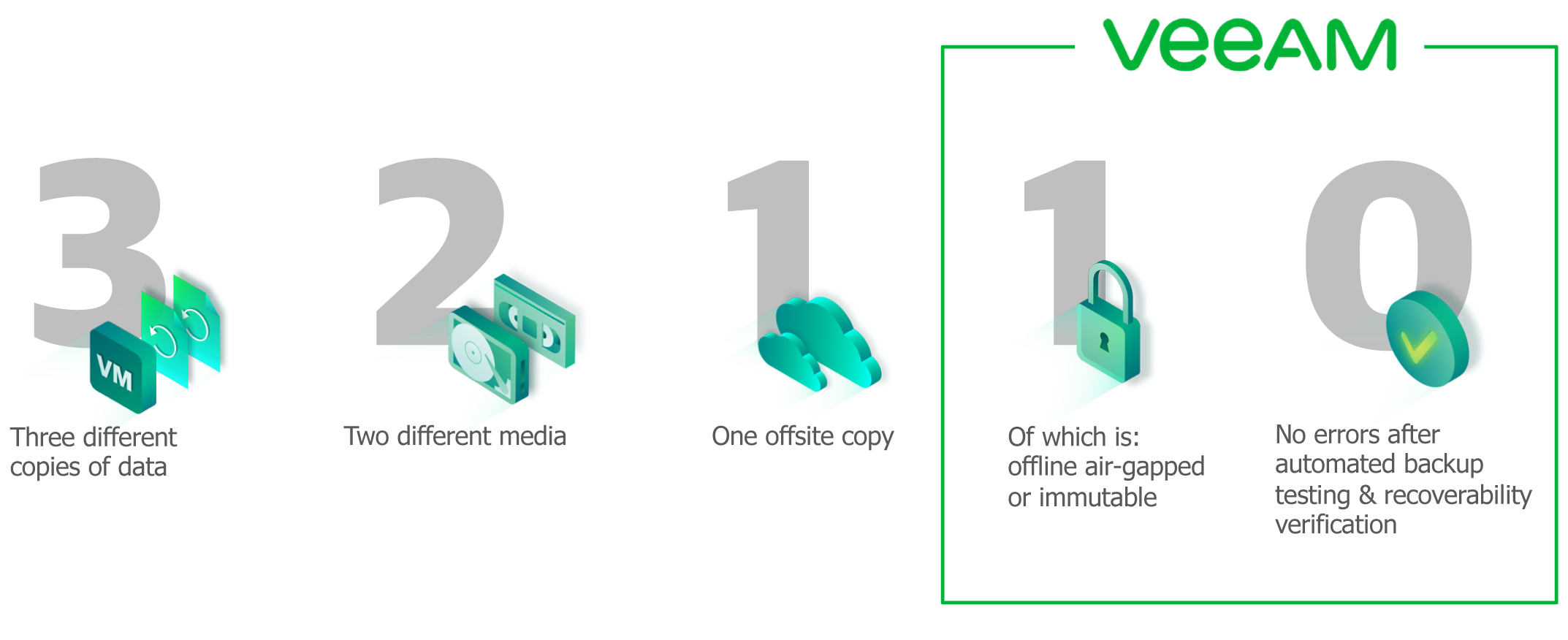

Arguably the simplest and most effective way to eliminate the threat arising from almost any failure or type of attack is to follow the 3-2-1 principle – or its extended version, 3-2-1-1-0. It consists of the following:

3 – storing three copies of data: the first copy is the production data, and the other two are backups, archives, or replicas. Production data is naturally the primary source, but if it becomes unavailable, damaged, or lost, the data can be read from a backup copy. Because a backup copy can itself be damaged, at least two of them are recommended, especially since a data center failure often destroys the primary backup copy along with the production data.

2 – using two different storage media. First and foremost, production data and backup copies should not be stored on the same array, because a hardware failure or other damage to that array would destroy both. Repository architecture should also be chosen to impede the spread of ransomware through the infrastructure. If the primary repository is based on Windows, the additional repository could be based on Linux. If data is stored on hardware deduplication appliances such as HPE StoreOnce, HPE's own Catalyst protocol should be used instead of widely known protocols such as NFS or CIFS. Where object-based arrays are an option, they hinder ransomware further, because unlike the more common block-based backup arrays they do not expose a familiar file system.

In addition, object-based arrays use a different protocol, S3, and do not rely on standard domain user authentication. Copy jobs between repositories, which are built into Veeam, use native Veeam protocols. When the servers are properly configured and these protocols are the only traffic allowed, ransomware would need to know the protocol to breach the additional repository. Mixing operating systems, protocols, and file-, block-, and object-based arrays forces ransomware to understand and propagate across a wider range of systems, which increases the complexity it must handle.

1 – one copy should be stored offsite. What counts as sufficiently offsite is left to the individual administrator, but as a rule it must be an environment that is not affected by the same factors as the production infrastructure.

1 – this is an extension of the basic 3-2-1 principle. One of the copies should be immutable, meaning it cannot be modified or deleted. Immutability is intended to prevent ransomware from encrypting the data and other types of malware from corrupting or deleting it. The most common way to achieve immutability is to write the backup to tape media stored in an external location. However, tape is a sequential device and does not allow for easy granular data recovery, which might be necessary after a failure. It is worth considering cloud storage, where the S3 protocol offers Object Lock, a feature that sets a period during which data is protected against modification and deletion. A policy can then be defined in which, for example, the last month is protected, so that even after a successful attack on the infrastructure, the last month's backups would still allow data recovery.

Furthermore, using the cloud and the S3 protocol offers the benefits of object storage, as mentioned earlier. Veeam also introduces the option to use the immutable flag on repositories based on Linux, which significantly facilitates the implementation of this feature in existing infrastructures. During repository definition, the root account is used once to establish the immutable flag, and then Veeam services run using a user with lower permissions for backup purposes. Such a user cannot remove or shorten data protection time, so even if the backup system becomes an attack vector, a compromised administrator account doesn't allow deleting backup copies.
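The Object Lock retention window mentioned above can be illustrated with a short sketch. It is not how Veeam implements the feature; it only shows how a retain-until date for a one-month policy might be computed and packaged as request parameters. The bucket name, object key, and both helper functions are hypothetical. `ObjectLockMode` and `ObjectLockRetainUntilDate` are parameter names from the S3 PutObject API, and the bucket is assumed to have been created with Object Lock enabled.

```python
from datetime import datetime, timedelta, timezone


def object_lock_params(bucket, key, retention_days=30, now=None):
    """Build PutObject keyword arguments that lock an object for `retention_days`."""
    now = now or datetime.now(timezone.utc)
    return {
        "Bucket": bucket,
        "Key": key,
        # COMPLIANCE mode: retention cannot be shortened or removed by any user.
        "ObjectLockMode": "COMPLIANCE",
        "ObjectLockRetainUntilDate": now + timedelta(days=retention_days),
    }


def is_protected(retain_until, at):
    """Return True while the object may not yet be modified or deleted."""
    return at < retain_until


# Hypothetical bucket and key; a fixed clock keeps the example reproducible.
uploaded = datetime(2024, 1, 1, tzinfo=timezone.utc)
params = object_lock_params("backup-bucket", "vm01/full.vbk", now=uploaded)
retain_until = params["ObjectLockRetainUntilDate"]
```

With an S3 client such as boto3, a dictionary like this could be passed as `put_object(Body=data, **params)`. A backup product would normally set the retention itself, so the sketch is useful mainly for reasoning about how long a given restore point stays protected.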

0 – meaning zero errors during recovery. This second extension of the 3-2-1 formula obliges administrators to verify regularly that backup copies can actually be restored. After infecting a system, ransomware often stays dormant in order to spread to as many servers as possible. During this time, normal backup policies keep running, so infected machines are backed up and often archived as well. In extreme cases, files may already be damaged or encrypted without the administrator noticing until the server is restarted. Manual tests verify whether a machine boots correctly, whether file damage errors appear, and whether applications function properly. Such tests are typically run in a dedicated environment that reproduces the application topology and is separated from production. With a large number of machines, however, manual testing is impractical because of the administrator's time constraints. This is why Veeam introduced the SureBackup functionality, which automates such tests.

By utilizing the virtual lab component, Veeam allows for the automatic creation of an isolated network zone in the existing infrastructure, eliminating the need to dedicate servers to the test environment.

Within the isolated zone, it's possible to define multiple networks to replicate the network topology, allowing verification of the functionality of distributed applications, not just individual servers. The testing procedure utilizes the Instant VM Recovery feature, allowing the direct launch of a virtual machine from a deduplicated and compressed backup copy.

Next, the network adapters of these machines are swapped to ensure network isolation. The machines are then started on the hypervisor, the VM heartbeat is verified, a ping to the machine's IP address is checked, and application tests are run, both those built into the software and any scripts defined by the administrator. After the tests finish, the environment is automatically powered off so that it does not consume the resources of the virtualization host.
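The order of the verification steps can be sketched as a small pipeline. This is an illustration only and does not use any Veeam API: `run_verification`, the check names, and the `power_off` callback are hypothetical stand-ins. Stopping at the first failed check is a design choice of the sketch, not a statement about how SureBackup behaves.

```python
from typing import Callable, List, Tuple

Check = Tuple[str, Callable[[], bool]]


def run_verification(
    checks: List[Check], power_off: Callable[[], None]
) -> List[Tuple[str, bool]]:
    """Run checks in order, stop at the first failure, always power off the lab."""
    results = []
    try:
        for name, check in checks:
            try:
                ok = bool(check())
            except Exception:
                ok = False  # a crashing check counts as a failed check
            results.append((name, ok))
            if not ok:
                break
    finally:
        # Release host resources whether or not the tests passed.
        power_off()
    return results


# Stand-in checks; real ones would query the hypervisor, send a ping, run scripts.
events = []
report = run_verification(
    [
        ("heartbeat", lambda: True),
        ("ping", lambda: True),
        ("application script", lambda: False),
    ],
    power_off=lambda: events.append("powered off"),
)
```

Putting the power-off step in a `finally` block mirrors the point made above: the test environment should be released even when a check fails or raises.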

As an extension of these tests, the backup content can also be verified with an antivirus scan. Secure Restore uses the antivirus client already present in the infrastructure to scan the contents of the backup copy with the latest signatures. As mentioned earlier, when ransomware infiltrates the infrastructure and infects a machine, it is backed up together with that machine until it is activated or detected.

Once the antivirus vendor becomes aware of a specific threat, it releases a signature that detects it. After a failure or a ransomware attack on the production infrastructure, newer signatures make it possible to re-evaluate whether a backup is infected before restoring it. If it is, the machine can either be restored to an isolated area and cleaned of the malicious code manually, or an earlier restore point can be used. This helps ensure that the recovered machine does not reinfect the production environment.
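The choice of falling back to an earlier restore point can be expressed as a simple selection over scan results. The sketch below assumes each restore point has already been scanned with the latest signatures; the `RestorePoint` type, its fields, and the sample dates are hypothetical and do not correspond to any Veeam data structure.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Sequence


@dataclass(frozen=True)
class RestorePoint:
    created: date
    infected: bool  # result of a scan with the latest signatures


def latest_clean(points: Sequence[RestorePoint]) -> Optional[RestorePoint]:
    """Return the newest restore point whose scan found nothing, or None."""
    clean = [p for p in points if not p.infected]
    return max(clean, key=lambda p: p.created, default=None)


# Hypothetical history: the two most recent backups already contain the malware.
history = [
    RestorePoint(date(2024, 3, 1), infected=False),
    RestorePoint(date(2024, 3, 2), infected=False),
    RestorePoint(date(2024, 3, 3), infected=True),
    RestorePoint(date(2024, 3, 4), infected=True),
]
choice = latest_clean(history)
```

If no clean point exists, the function returns `None`, which corresponds to the other option described above: restoring into an isolated area and removing the malicious code manually.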

Even on its own, adherence to the 3-2-1-1-0 principle helps secure the backup infrastructure by separating environments and keeping redundant backup copies, which mitigates the threats arising from accidental or intentional data damage. Adding the capabilities of modern backup solutions, such as automated recovery testing, cloud and object storage, integration with various data transfer protocols, immutable flags, and antivirus scanning during recovery, yields a secure backup that can be relied on when data must be recovered after an attack.
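As a recap, the 3-2-1-1-0 principle can be written down as a checklist over an inventory of data copies. This is a minimal sketch under stated assumptions: the `Copy` record, its fields, and the sample inventory are hypothetical, and how "medium" or "offsite" is defined in a real environment remains the administrator's decision.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Sequence


@dataclass(frozen=True)
class Copy:
    name: str
    medium: str  # e.g. "block array", "linux repository", "s3 object storage"
    is_production: bool = False
    offsite: bool = False
    immutable: bool = False
    restore_errors: Optional[int] = None  # None means recovery was never tested


def check_3_2_1_1_0(copies: Sequence[Copy]) -> Dict[str, bool]:
    """Evaluate each part of the rule separately so gaps are easy to spot."""
    backups = [c for c in copies if not c.is_production]
    return {
        "3 copies": len(copies) >= 3,
        "2 media": len({c.medium for c in copies}) >= 2,
        "1 offsite": any(c.offsite for c in backups),
        "1 immutable": any(c.immutable for c in backups),
        # Untested backups fail this check: zero errors must be demonstrated.
        "0 errors": bool(backups) and all(c.restore_errors == 0 for c in backups),
    }


inventory = [
    Copy("production", "block array", is_production=True),
    Copy("local backup", "linux repository", immutable=True, restore_errors=0),
    Copy("cloud copy", "s3 object storage", offsite=True, immutable=True,
         restore_errors=0),
]
status = check_3_2_1_1_0(inventory)
```

Returning a dictionary rather than a single boolean makes it clear which part of the rule is not yet met, for example a copy whose recoverability has never been tested.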

Veeam® is a leader in data backup, recovery, and data management solutions that provide modern data protection. The company offers a comprehensive platform designed for cloud, virtual, physical, SaaS, and Kubernetes environments. Veeam customers can be confident that their applications and data are protected from ransomware, threats, and malicious manipulations, while always being available on the simplest, most reliable, efficient, and flexible platform in the industry. Veeam protects over 400,000 customers worldwide, including 81% of the Fortune 500 list and 70% of the Global 2000 ranking. Veeam's global ecosystem includes over 35,000 technology and alliance partners and service providers, with local offices in over 30 countries.

-----------------------------

Author: Tomasz Turek - Senior Systems Engineer at Veeam

Solutions architect specializing in security, management, and disaster recovery planning for physical and virtual infrastructures based on VMware, Microsoft platforms, as well as cloud environments. Responsible for technical support for major projects, advising on best practices for securing physical and virtual machines, designing backup environment architecture, and data recovery policy planning.
